Quantization using bitsandbytes

‘bitsandbytes’ is a library that reduces model size using 8-bit and 4-bit quantization. This lowers memory usage and can help fit large models onto the available hardware. In this blog, we describe the process on the free Google Colab platform.

Installation

We need to install transformers, accelerate and bitsandbytes.

```bash
pip install transformers accelerate bitsandbytes -qU
```

In a Colab cell, prefix the command with an exclamation mark (!pip install ...).

The ‘accelerate’ library is needed when using ‘bitsandbytes’ for several reasons:

- Efficient Hardware Utilization: ‘accelerate’ decides where each part of the model is placed across the available devices (one or more GPUs, CPU RAM and, if needed, disk). This matters for large models that would not otherwise fit on a single device, and it lets quantized models be loaded and run using whatever hardware is available.

- Model Loading and Configuration: When quantizing models with ‘bitsandbytes’, the ‘from_pretrained()’ method from the ‘transformers’ library relies on ‘accelerate’ to manage model loading. This includes handling different device maps and optimizing the placement of model parts (e.g., offloading parts of the model to the CPU if needed).

- Compatibility: Many advanced features in the Hugging Face ecosystem, including those used for model quantization and optimization, are built to work with ‘accelerate’, which ensures smooth integration when configuring and running quantized models.

In summary, ‘accelerate’ provides the necessary infrastructure for effectively using and deploying quantized models, especially when dealing with complex hardware setups and large models.
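To make the idea of a device map concrete, here is a toy sketch of sequential, capacity-based placement. It is a simplified illustration under stated assumptions, not accelerate’s actual algorithm: the function name ‘greedy_device_map’, the layer sizes and the device budgets are all hypothetical. In practice you would pass device_map="auto" to ‘from_pretrained()’ and let ‘accelerate’ work out the placement.

```python
from typing import Dict, List, Tuple


def greedy_device_map(
    layers: List[Tuple[str, int]],
    budgets: List[Tuple[str, int]],
) -> Dict[str, str]:
    """Hypothetical helper: place layers in order on the first device with room.

    layers:  ordered (layer_name, size_in_bytes) pairs.
    budgets: ordered (device_name, capacity_in_bytes) pairs, e.g. GPU then CPU.
    Once placement spills over to the next device, later layers never move
    back, which keeps consecutive layers together.
    """
    remaining = [[device, capacity] for device, capacity in budgets]
    device_map: Dict[str, str] = {}
    idx = 0
    for name, size in layers:
        # Move on to the next device while the current one is too full.
        while idx < len(remaining) and remaining[idx][1] < size:
            idx += 1
        if idx == len(remaining):
            raise MemoryError(f"no device has room for {name} ({size} bytes)")
        remaining[idx][1] -= size
        device_map[name] = remaining[idx][0]
    return device_map


# Toy example: a 1000-byte "GPU" followed by a 2000-byte "CPU".
layers = [("embed", 300), ("layer.0", 400), ("layer.1", 400), ("lm_head", 300)]
budgets = [("cuda:0", 1000), ("cpu", 2000)]
print(greedy_device_map(layers, budgets))
# {'embed': 'cuda:0', 'layer.0': 'cuda:0', 'layer.1': 'cpu', 'lm_head': 'cpu'}
```

The first two layers fill most of the toy GPU, so the remaining layers are offloaded to the CPU, which is the kind of split a real device map expresses.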

You also need to import PyTorch.

```python
import torch
```

Breakdown of the process of 8-bit quantization

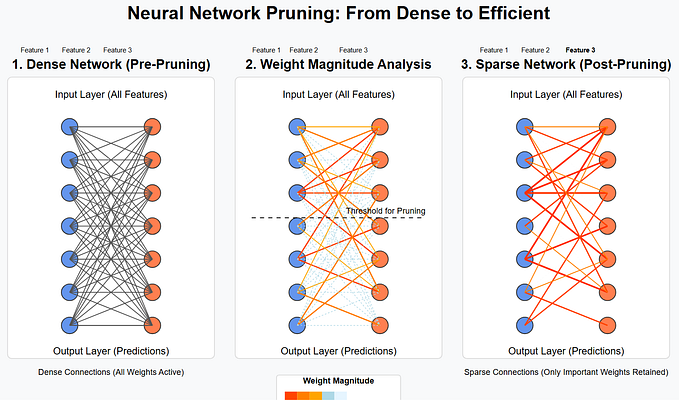

Quantization is a technique that reduces the numerical precision of a model’s parameters (mainly its weights), which shrinks the model’s memory footprint and can improve computational efficiency.
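A common way to map floating-point values to int8 is absmax (symmetric) quantization: scale the values so that the largest magnitude becomes 127, round to integers, and divide by the same scale to recover approximate values. LLM.int8() applies this per row or column (vector-wise); the sketch below is a simplified, pure-Python version for a single vector, and the function names are illustrative.

```python
from typing import List, Tuple


def absmax_quantize(values: List[float]) -> Tuple[List[int], float]:
    """Scale values so the largest magnitude maps to 127, then round to ints."""
    max_abs = max(abs(v) for v in values)
    scale = 127 / max_abs if max_abs > 0 else 1.0
    # Clamp to the symmetric int8 range [-127, 127].
    quantized = [max(-127, min(127, round(v * scale))) for v in values]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map the int8 codes back to approximate floating-point values."""
    return [q / scale for q in quantized]


weights = [0.5, -1.2, 0.03, 2.0]
q, s = absmax_quantize(weights)
print(q)  # [32, -76, 2, 127]
print(dequantize(q, s))
```

Each recovered value differs from the original by at most half a quantization step (0.5 / scale).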

The values involved in each quantized layer can be classified as outliers and non-outliers. In bitsandbytes’ 8-bit scheme (LLM.int8()), this split is made on the layer’s input hidden states, feature by feature:

- Outliers are values with unusually large magnitude compared to the rest (above a threshold, 6.0 by default). The feature dimensions that contain them, together with the matching weights, are processed in 16-bit floating point (fp16) for higher precision.

- Non-outliers are the more typical values that fall within a common range. These, and their matching weights, are converted to 8-bit integer (int8) format.
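In LLM.int8(), the outlier split is made on the layer’s input hidden states, one feature column at a time: any column containing a value whose magnitude exceeds a threshold (‘llm_int8_threshold’ in ‘BitsAndBytesConfig’, 6.0 by default) is treated as an outlier column. The pure-Python sketch below shows only that selection step; the helper name and the toy matrix are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # rows = tokens, columns = hidden features


def split_outlier_columns(
    hidden: Matrix, threshold: float = 6.0
) -> Tuple[List[int], List[int]]:
    """Return (outlier_cols, regular_cols) for a tokens x features matrix.

    A column is an outlier if any entry in it has magnitude above `threshold`.
    """
    n_cols = len(hidden[0])
    outlier_cols = [
        j for j in range(n_cols) if any(abs(row[j]) > threshold for row in hidden)
    ]
    regular_cols = [j for j in range(n_cols) if j not in outlier_cols]
    return outlier_cols, regular_cols


hidden_states = [
    [0.1, -0.4, 8.5, 0.2],
    [0.3, 0.2, -7.9, -0.1],
]
print(split_outlier_columns(hidden_states))  # ([2], [0, 1, 3])
```

Column 2 holds the large values, so it (and the matching row of the weight matrix) would take the fp16 path, while columns 0, 1 and 3 go through int8.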

The non-outlier part is multiplied in int8, and its result is then dequantized (converted back) to fp16 and added to the fp16 result computed for the outliers. The layer therefore produces its output in a single, uniform precision (fp16), which simplifies combining the two parts.
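The full decomposition for one linear layer can be sketched as follows: pick the outlier feature columns, run the remaining features through an int8 dot product with vector-wise absmax scales, dequantize, and add the high-precision outlier contribution. This is a simplified pure-Python model of the idea, not bitsandbytes’ CUDA implementation; Python floats stand in for fp16, and all names are illustrative.

```python
from typing import List

Matrix = List[List[float]]


def absmax_scale(values: List[float]) -> float:
    """Scale that maps the largest magnitude in `values` to 127."""
    max_abs = max((abs(v) for v in values), default=0.0)
    return 127 / max_abs if max_abs > 0 else 1.0


def exact_matmul(x: Matrix, w: Matrix) -> Matrix:
    """Reference x @ w in full Python float precision."""
    return [
        [sum(x[i][j] * w[j][k] for j in range(len(w))) for k in range(len(w[0]))]
        for i in range(len(x))
    ]


def mixed_precision_matmul(x: Matrix, w: Matrix, threshold: float = 6.0) -> Matrix:
    """Simplified LLM.int8()-style x @ w.

    x: tokens x features (hidden states); w: features x outputs (weights).
    """
    n_tok, n_feat, n_out = len(x), len(w), len(w[0])
    outliers = {j for j in range(n_feat) if any(abs(row[j]) > threshold for row in x)}
    regular = [j for j in range(n_feat) if j not in outliers]

    # Vector-wise scales: one per row of x, one per column of w (regular part only).
    x_scales = [absmax_scale([row[j] for j in regular]) for row in x]
    w_scales = [absmax_scale([w[j][k] for j in regular]) for k in range(n_out)]

    out = [[0.0] * n_out for _ in range(n_tok)]
    for i in range(n_tok):
        for k in range(n_out):
            # Int8 path: integer dot product, then dequantize with both scales.
            int_acc = sum(
                round(x[i][j] * x_scales[i]) * round(w[j][k] * w_scales[k])
                for j in regular
            )
            int8_part = int_acc / (x_scales[i] * w_scales[k])
            # High-precision path for the outlier feature columns.
            outlier_part = sum(x[i][j] * w[j][k] for j in outliers)
            out[i][k] = int8_part + outlier_part
    return out


x = [[0.1, -0.4, 8.5, 0.2], [0.3, 0.2, -7.9, -0.1]]
w = [[0.5, -0.2], [0.1, 0.3], [0.05, -0.1], [-0.3, 0.2]]
print(exact_matmul(x, w))
print(mixed_precision_matmul(x, w))
```

The two results agree up to small rounding error from the int8 path, while the large values in column 2 contribute through the exact path.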

Handling the outliers in fp16 preserves precision for the values that int8 rounding would distort the most, which helps maintain accuracy. Storing the linear-layer weights in int8 roughly halves their memory compared with fp16; however, the extra decomposition step means inference is not necessarily faster than in fp16. The method trades some speed for a large memory reduction while keeping accuracy close to that of the original model.
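The accuracy argument can be seen on a toy vector. With plain absmax int8 quantization, one large outlier sets the scale for everything, so small values round to zero; quantizing only the regular values and keeping the outlier in higher precision avoids this. The numbers are made up for illustration and do not come from a real model.

```python
from typing import List


def absmax_roundtrip(values: List[float]) -> List[float]:
    """Quantize to int8 with absmax scaling and immediately dequantize."""
    max_abs = max(abs(v) for v in values)
    scale = 127 / max_abs if max_abs > 0 else 1.0
    return [round(v * scale) / scale for v in values]


def max_abs_error(a: List[float], b: List[float]) -> float:
    """Largest absolute difference between two equal-length vectors."""
    return max(abs(x - y) for x, y in zip(a, b))


row = [0.01, -0.02, 0.015, 60.0]  # one large outlier at the end

# Naive: the outlier sets the scale, so the small values collapse to 0.0.
naive = absmax_roundtrip(row)

# Decomposed: quantize only the regular values, keep the outlier unquantized.
decomposed = absmax_roundtrip(row[:3]) + [row[3]]

print(naive)
print(max_abs_error(row, naive), max_abs_error(row, decomposed))
```

The naive error equals the size of the small values themselves, whereas the decomposed version keeps them to within a fraction of a percent.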

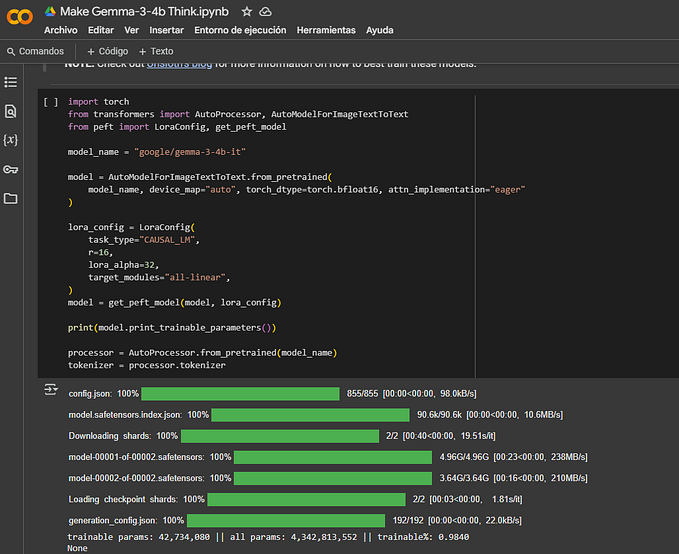

Here is the code to quantize a model using bitsandbytes:

```python
import torch
from transformers import AutoModelForCausalLM, BitsAndBytesConfig

# Quantize the model's linear layers to 8-bit while loading it
quantization_config = BitsAndBytesConfig(load_in_8bit=True)

model_8bit = AutoModelForCausalLM.from_pretrained(
    "facebook/opt-350m",
    quantization_config=quantization_config,
    torch_dtype=torch.float32,  # keep the non-quantized modules in float32
)
```

Here, torch_dtype=torch.float32 specifies that the parts of the model that are not quantized be kept in float32 (by default, transformers loads them in float16). Apart from the quantized layers, the model contains various other modules and parameters (e.g., normalization layers like torch.nn.LayerNorm, embeddings, etc.). Quantization usually targets specific parts of the model (e.g., Linear layers), where the memory savings are largest.
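To see what ‘torch_dtype’ changes, the rough arithmetic below counts bytes per parameter: 1 for int8 Linear weights, and 2 or 4 for the remaining modules depending on whether they stay in float16 or float32. It is a back-of-the-envelope sketch: the module inventory is hypothetical, and quantization constants, buffers, and the fact that transformers may leave some Linear layers (such as the output head) unquantized are all ignored.

```python
from typing import Dict, List, Tuple

# Bytes per parameter for the dtypes discussed in this post.
BYTES_PER_PARAM: Dict[str, int] = {"int8": 1, "float16": 2, "float32": 4}


def estimate_footprint(
    modules: List[Tuple[str, str, int]], other_dtype: str = "float16"
) -> int:
    """Rough weight memory in bytes when Linear layers are stored in int8.

    `modules` holds hypothetical (name, module_type, num_params) entries; every
    non-Linear module is stored in `other_dtype` (what torch_dtype controls).
    """
    total = 0
    for _name, module_type, num_params in modules:
        dtype = "int8" if module_type == "Linear" else other_dtype
        total += num_params * BYTES_PER_PARAM[dtype]
    return total


toy_model = [
    ("embed_tokens", "Embedding", 1_000_000),
    ("layers.0.fc1", "Linear", 4_000_000),
    ("layers.0.final_layer_norm", "LayerNorm", 2_000),
]
print(estimate_footprint(toy_model, "float16"))  # 6004000
print(estimate_footprint(toy_model, "float32"))  # 8008000
```

Keeping the non-quantized modules in float32 costs extra memory in exchange for higher precision in those modules.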

You can check the memory footprint of the quantized model (in bytes) as follows:

```python
print(model_8bit.get_memory_footprint())
```

Summary

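Using bitsandbytes, you can significantly reduce the memory footprint of models via 8-bit and 4-bit quantization. In practice, this means choosing a ‘BitsAndBytesConfig’, passing it to ‘from_pretrained()’, and, for models that do not fit on the GPU, letting ‘accelerate’ offload parts of the model to the CPU; inside each quantized layer, int8 results are dequantized back to fp16 when needed.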