Finetuning Mistral 7B using Unsloth

Unsloth is a project that allows you to finetune Llama 3, Mistral, Gemma and other Large Language Models with less memory and time.

For the purposes of this tutorial, we will be using Google Colab. Remember to allocate a GPU instance for the Colab notebook.

First, install unsloth, xformers, trl, peft, accelerate, bitsandbytes, triton and PyTorch:

!pip install "unsloth[colab-new] @ git+https://github.com/unslothai/unsloth.git"
!pip install --no-deps xformers trl peft accelerate bitsandbytes triton torch

Next, import the necessary packages:

from unsloth import FastLanguageModel
import torch

Then set some basic hyperparameter values:

max_seq_length = 2048
dtype = None
load_in_4bit = True

- For max_seq_length, any value can be used, since Unsloth supports RoPE scaling internally. dtype can be set to None so that Unsloth auto-detects the correct value (Float16 for Tesla T4 and V100, Bfloat16 for Ampere+).
- load_in_4bit = True loads the model with 4-bit quantization to reduce memory usage. Defining it once here as a variable means you only have to change its value in one place, rather than in every location where the parameter is used.
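The auto-detection rule in the first bullet can be pictured as a lookup on the GPU's CUDA compute capability: Ampere and newer cards (8.0 and above) support Bfloat16, while older ones such as the T4 (7.5) and V100 (7.0) fall back to Float16. The sketch below only illustrates that rule; the function name pick_dtype is hypothetical and this is not Unsloth's actual code.

```python
def pick_dtype(compute_capability: tuple[int, int]) -> str:
    """Illustrative rule only: bfloat16 on Ampere (8.x) or newer, else float16."""
    major, _minor = compute_capability
    return "bfloat16" if major >= 8 else "float16"


# Compute capabilities of the GPUs named in the text, plus an Ampere A100.
for name, capability in [("Tesla T4", (7, 5)), ("V100", (7, 0)), ("A100", (8, 0))]:
    print(f"{name}: {pick_dtype(capability)}")
```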

We are using unsloth’s own 4bit quantized mistral-7B model because of GPU limitations in the Colab instance.
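To get a feel for why the 4-bit model matters on a Colab GPU, here is a rough back-of-the-envelope estimate of the memory needed just to hold the weights. The parameter count of about 7.24 billion is an approximation, and the estimate ignores activations, optimizer state and quantization overhead, so treat it as a lower bound rather than a measurement.

```python
# Approximate parameter count of Mistral 7B (assumption for this estimate).
PARAMS = 7.24e9


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed to store the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


fp16_gb = weight_memory_gb(PARAMS, 16)
int4_gb = weight_memory_gb(PARAMS, 4)

print(f"16-bit weights: {fp16_gb:.1f} GB")
print(f" 4-bit weights: {int4_gb:.1f} GB")
```

Under these assumptions the 16-bit weights alone come to roughly 14.5 GB, which leaves almost no room for training on a 16 GB card such as the T4, while the 4-bit weights need roughly 3.6 GB.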

fourbit_models = [
    "unsloth/mistral-7b-v0.3-bnb-4bit",  # New Mistral v3 2x faster!
    "unsloth/mistral-7b-instruct-v0.3-bnb-4bit",
    "unsloth/llama-3-8b-bnb-4bit",  # Llama-3 15 trillion tokens model 2x faster!
    "unsloth/llama-3-8b-Instruct-bnb-4bit",
    "unsloth/llama-3-70b-bnb-4bit",
    "unsloth/Phi-3-mini-4k-instruct",  # Phi-3 2x faster!
    "unsloth/Phi-3-medium-4k-instruct",
    "unsloth/mistral-7b-bnb-4bit",
    "unsloth/gemma-7b-bnb-4bit",  # Gemma 2.2x faster!
]

These are the latest pre-quantized models that Unsloth makes available out of the box.

Now we instantiate the model and its tokenizer:

model, tokenizer = FastLanguageModel.from_pretrained(
    model_name = "unsloth/mistral-7b-instruct-v0.2-bnb-4bit", # Choose ANY! eg teknium/OpenHermes-2.5-Mistral-7B
    max_seq_length = max_seq_length,
    dtype = dtype,
    load_in_4bit = load_in_4bit,
)

A Hugging Face token can also be passed when instantiating the model. This is particularly helpful when finetuning gated models.

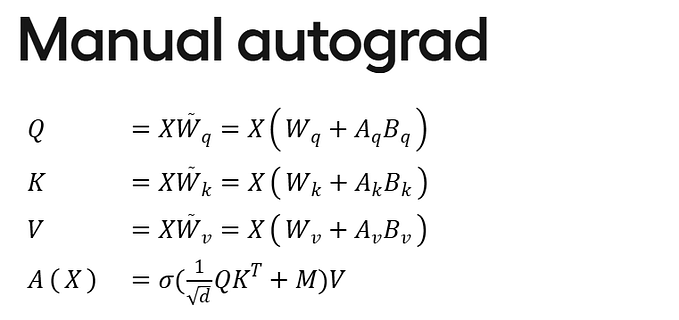

Now we wrap the model with PEFT (LoRA) adapters:

model = FastLanguageModel.get_peft_model(
    model,
    r = 16, # Choose any number > 0 ! Suggested 8, 16, 32, 64, 128
    target_modules = ["q_proj", "k_proj", "v_proj", "o_proj",
                      "gate_proj", "up_proj", "down_proj",],
    lora_alpha = 16,
    lora_dropout = 0, # Supports any, but = 0 is optimized
    bias = "none", # Supports any, but = "none" is optimized
    # [NEW] "unsloth" uses 30% less VRAM, fits 2x larger batch sizes!
    use_gradient_checkpointing = "unsloth", # True or "unsloth" for very long context
    random_state = 3407,
    use_rslora = False, # We support rank stabilized LoRA
    loftq_config = None, # And LoftQ
)

After that, we define a prompt template and a formatting function that turns each example into the text sent to the model:

prompt = """Below given is an input comment that needs to be converted to a vague but correct target comment.

### Input Comment:

{}

### Target Comment:

{}

"""

EOS_TOKEN = tokenizer.eos_token # Must add EOS_TOKEN

def formatting_prompts_func(examples):
    inputs = examples["input"]
    targets = examples["target"]
    texts = []
    for input_text, target in zip(inputs, targets):
        # Must add EOS_TOKEN, otherwise your generation will go on forever!
        text = prompt.format(input_text, target) + EOS_TOKEN
        texts.append(text)
    return { "text" : texts, }
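To see what the formatting function produces without loading a model, here is a minimal standalone sketch. It uses "</s>" as a stand-in for tokenizer.eos_token (an assumption for this demo), a simplified blank-line layout for the template, and one made-up example row.

```python
# Stand-in for tokenizer.eos_token; "</s>" is an assumption for this demo.
EOS_TOKEN = "</s>"

# Same wording as the tutorial's template; the blank-line layout is simplified.
prompt = """Below given is an input comment that needs to be converted to a vague but correct target comment.

### Input Comment:
{}

### Target Comment:
{}
"""


def formatting_prompts_func(examples):
    """Fill the template for every (input, target) pair and append the EOS token."""
    texts = []
    for input_text, target in zip(examples["input"], examples["target"]):
        texts.append(prompt.format(input_text, target) + EOS_TOKEN)
    return {"text": texts}


# A made-up batch in the column-wise layout that dataset.map(..., batched=True) passes in.
batch = {
    "input": ["You are always late."],
    "target": ["Punctuality could be improved."],
}
formatted = formatting_prompts_func(batch)
print(formatted["text"][0])
```

Each entry of the returned "text" list is the filled-in template followed by the EOS token, which is what tells the model where a training example ends.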

The data can be prepared in any way that you see fit. Here I read from a CSV file and convert it into a dictionary whose keys are the column names and whose values are lists holding the text data of each column.
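As a small illustration of that column-wise layout, the sketch below parses an in-memory CSV instead of a file on disk. The two rows are made up, and the column order (target first, input second) mirrors the tutorial's own loading code, which follows.

```python
import csv
import io

# Two made-up rows: column 0 is the target, column 1 is the input.
# The second row is quoted because its input contains a comma.
sample_csv = io.StringIO(
    "Punctuality could be improved.,You are always late.\n"
    '"The report has room to grow.","The report is sloppy, and full of errors."\n'
)

data_dict = {"input": [], "target": []}
for row in csv.reader(sample_csv):
    data_dict["target"].append(row[0])
    data_dict["input"].append(row[1])

print(data_dict["input"])
print(data_dict["target"])
```

Using csv.reader rather than splitting on commas by hand keeps quoted fields intact, which matters for free-text comments.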

import csv

# data_file_path must point to your CSV file (column 0: target, column 1: input).
data_dict = {"input": [], "target": []}

with open(data_file_path, mode='r', newline='') as input_file:
    csv_reader = csv.reader(input_file)
    for items in csv_reader:
        data_dict["target"].append(items[0])
        data_dict["input"].append(items[1])

Then we build a dataset from this dictionary using the datasets library and map the formatting function over it. This adds a "text" column that combines the input and target columns into the formatted prompt, so the trainer can read the correctly formatted training input for each row from that column.

from datasets import Dataset

dataset = Dataset.from_dict(data_dict)
dataset = dataset.map(formatting_prompts_func, batched=True)

Now we define the trainer. We will be using the SFTTrainer (supervised finetuning) from the trl library. Unsloth also supports the DPOTrainer.

from trl import SFTTrainer
from transformers import TrainingArguments
from unsloth import is_bfloat16_supported

trainer = SFTTrainer(
    model = model,
    tokenizer = tokenizer,
    train_dataset = dataset,
    dataset_text_field = "text",
    max_seq_length = max_seq_length,
    dataset_num_proc = 2,
    packing = False, # Can make training 5x faster for short sequences.
    args = TrainingArguments(
        per_device_train_batch_size = 2,
        gradient_accumulation_steps = 4,
        warmup_steps = 5,
        num_train_epochs = 7,
        # max_steps = 60, # Set num_train_epochs = 1 for full training runs
        learning_rate = 2e-4,
        fp16 = not is_bfloat16_supported(),
        bf16 = is_bfloat16_supported(),
        logging_steps = 816,
        optim = "adamw_8bit",
        weight_decay = 0.01,
        lr_scheduler_type = "linear",
        seed = 3407,
        output_dir = "path/to/where/you/want/the/checkpoints/to/be/saved",
    ),
)

Finally, we train the model:

trainer_stats = trainer.train()

Note: make sure to read the comments in the code, as they also contain pertinent information. Also read through the code and make the necessary changes, such as the file paths.
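When adapting the training arguments, it helps to work out how many optimizer steps a run will take. The sketch below does that arithmetic for the batch settings used above; the dataset size of 1000 rows is hypothetical, and the exact rounding of steps per epoch may differ slightly between transformers versions.

```python
import math

num_examples = 1000        # hypothetical dataset size; use len(dataset) in practice
per_device_batch_size = 2  # per_device_train_batch_size
grad_accum_steps = 4       # gradient_accumulation_steps
num_epochs = 7             # num_train_epochs
num_devices = 1            # a single Colab GPU
logging_steps = 816        # value used in the tutorial

# Examples consumed per optimizer update.
effective_batch = per_device_batch_size * grad_accum_steps * num_devices
# Approximate number of optimizer updates per pass over the data.
steps_per_epoch = math.ceil(num_examples / effective_batch)
total_steps = steps_per_epoch * num_epochs
# How many times the training loss would be logged during the run.
log_events = total_steps // logging_steps

print(f"effective batch size: {effective_batch}")
print(f"steps per epoch:      {steps_per_epoch}")
print(f"total steps:          {total_steps}")
print(f"loss logged:          {log_events} time(s)")
```

With these assumed numbers the effective batch size is 8, giving 125 steps per epoch and 875 steps in total, so logging_steps = 816 would report the loss only once. For a dataset of a different size, a smaller logging_steps may give a more useful view of training progress.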

Inference can be run on the model as follows:

from transformers import TextStreamer

streamer = TextStreamer(tokenizer)
FastLanguageModel.for_inference(model) # Enable native 2x faster inference

inputs = tokenizer(
    [
        prompt.format(
            "You are generally not generous. In many ways you are selfish.", # input
            "", # target (left empty so that the model generates it)
        )
    ], return_tensors = "pt").to("cuda")

_ = model.generate(**inputs, streamer=streamer, max_new_tokens = 256, use_cache = True)
# outputs = model.generate(**inputs, max_new_tokens = 256, use_cache = True)

You can use the TextStreamer class from Hugging Face to print tokens as they are generated, or use the commented-out call to wait for the model to generate the complete text.

Also note that the prompt mentioned in the above code block was previously defined further up in the tutorial.
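If you use the non-streaming call, the decoded output (for example from tokenizer.batch_decode(outputs)) contains the whole prompt followed by the generation. A small helper can pull out just the generated target comment. The function name extract_target, the "</s>" EOS string and the sample decoded text below are all placeholders for illustration, not real model output.

```python
MARKER = "### Target Comment:"


def extract_target(decoded: str, eos_token: str = "</s>") -> str:
    """Return the text after the target marker, with the EOS token removed."""
    _before, _marker, generated = decoded.partition(MARKER)
    return generated.replace(eos_token, "").strip()


# Placeholder string standing in for a decoded generation (not real model output).
decoded = (
    "<s> Below given is an input comment that needs to be converted "
    "to a vague but correct target comment.\n\n"
    "### Input Comment:\n"
    "You are generally not generous. In many ways you are selfish.\n\n"
    "### Target Comment:\n"
    "Generosity may not be your strongest trait.\n</s>"
)
print(extract_target(decoded))
```

If the marker is missing from the text, the helper returns an empty string, which makes a malformed generation easy to detect.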